
|

2019

April

30

|

Integrated flux nebula in Ursa Major

Hang on for some professional astronomy, with links to the academic literature...

Traditionally, amateur astrophotography has lagged so far behind

professional work that we spend our time photographing objects discovered

more than a century ago. But here is an exception. This picture shows

something whose definitive discovery was announced in 1976, although there

were brief indications of it 11 years earlier.

When I was born, nobody knew it was there.

Stack of fifteen 2-minute exposures, Hα-modified Nikon D5500,

Nikon 180/2.8 ED AI lens at f/4, and Celestron AVX mount, taken at Deerlick on

the night of the 27th.

In the middle of the picture are the galaxies M81 and M82, substantially overexposed.

But at the top right is a big reflection nebula first described in any detail

by Allan Sandage

in 1976. (Click to see the article.)

It is listed as group 19 (objects at 147.56 36.88 and 147.82 37.57,

now known as LBN 692 and 695)

in Beverly Lynds' catalog

of bright nebulae (1965),

but she apparently saw only faint indications of it on Palomar photographic plates.

Most reflection nebulae reflect the light of stars embedded in them.

Not this one! It's so big and sprawling that it's obviously not illuminated by a

single star — if it were, it would be very bright around that star.

No; this is an integrated flux nebula (IFN), as Sandage clearly pointed out.

It is lit by the combined light (integrated flux) of a large part of our galaxy.

The concept of integrated flux nebula was broached by

van den Bergh

in his 1966 study of reflection nebulae.

He points out that from Earth, our view of the center of our galaxy is blocked by dust.

If we were outside the plane of the galaxy, above it, the dust would not block the view,

and the galactic center would look as bright as a crescent moon.

That's plenty bright enough to make interstellar dust visible.

I hesitate to apply the term IFN to any faint, sprawling nebula.

Often it takes a good bit of research to find out whether a faint nebulosity is lit by

embedded or nearby stars or the integrated flux of much of the galaxy; it could even turn

out to be a faint streamer from a distant galaxy (as part of the material close to M81 was

at one time thought to be). So I prefer the term galactic cirrus for gas and dust

clouds spread loosely throughout our galaxy, a term that comes from infrared astronomy.

I must thank Steve Mandel and Michael Wilson for promoting amateur awareness of IFN and other

faint nebulae, even though I cannot find their web site online right now.

It is archived at https://web.archive.org/web/20170325074937/http://www.galaxyimages.com/MWCatalogue.html

(catalogue) and https://web.archive.org/web/20170121101638/http://www.galaxyimages.com/UNP_IFNebula.html (pictures).

They call this one the Volcano Nebula.

(Incidentally, a nonexistent person, "Steve Mandel-Wilson," has gotten into Wikipedia and numerous

other web sites!)

Also, there are some excellent pictures of galactic cirrus in

Rogelio Bernal Andreo's online gallery [link corrected],

and he has been active in trying to catalogue the faint nebulae that he photographs.

Why didn't we know these nebulae were there?

Mainly because they were almost too faint to detect.

For years we amateurs have probably recorded them faintly and processed them

right out of our pictures, mistaking them for random irregularity in the sensor!

Galactic cirrus was widely recognized as a result of the Infrared Astronomical Satellite (IRAS)

(see for example this paper).

Of course, our sensors and image processing have improved too.

It's no accident that I photographed IFN with a new Sony sensor and not a camera

made in 2004, or that I did the processing with PixInsight rather than Photoshop.

The discovery of galactic cirrus,

in turn, brings to mind various "ghost stories" from past visual observers at very

dark sites who reported faint luminosity of the sky background, never confirmed by other

observers. These include

Herschel's ghosts,

Hagen's clouds,

and Baxendell's unphotographable nebula

(see also this

and this).

Now that we have good sensors and digital image processing techniques,

it's time to take another look.

Permanent link to this entry

|

2019

April

29

|

A walk through Monoceros

On the evening of April 27 I seized the opportunity to go and take pictures under

(or rather of) the

dark skies of the Deerlick Astronomy Village.

I used the new Nikon D5500 Hα, the AVX mount, and my vintage 180-mm f/2.8 ED AI lens

at f/4.

Here's one of the results:

You're looking at an area of the Milky Way with plenty of interstellar dust.

A long, winding dark (unilluminated) nebula snakes its way down the middle of

the picture.

The two bright glows left and right of center are where the nebulosity is

illuminated by starlight.

The Christmas Tree Cluster (NGC 2264) is the object on the left;

the one on the right, larger and whiter, is NGC 2245.

The reddish patch in the middle of the picture is a more faintly illuminated nebula that is

predominantly hydrogen and glows by fluorescence rather than reflection.

If I'd realized the Rosette Nebula was out of the field at the bottom,

I would have aimed lower.

The picture is reddish overall because I set the color balance to bring out faint

red nebulosity. I think we can say that the new camera works.

Stack of seven 2-minute exposures at ISO 200. Yes, 200; these newer sensors have

their greatest dynamic range, and lose no real sensitivity, at the lowest ISO settings.
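As a rough illustration of what stacking buys, here is a minimal Python sketch. It assumes the frames are equally exposed and that their noise is random and uncorrelated, in which case averaging N frames improves the signal-to-noise ratio by about the square root of N. The function name is my own, for illustration only.

```python
import math

def stack_summary(frames, minutes_per_frame):
    """Return (total integration time in minutes, approximate SNR gain).

    Assumes equal exposures with random, uncorrelated noise, so that
    averaging N frames improves signal-to-noise by about sqrt(N).
    """
    if frames < 1:
        raise ValueError("need at least one frame")
    total_minutes = frames * minutes_per_frame
    snr_gain = math.sqrt(frames)
    return total_minutes, snr_gain

# The stack described above: seven 2-minute exposures.
total, gain = stack_summary(7, 2)
print(f"{total} minutes total, SNR about {gain:.2f}x a single frame")
```

Real frames also carry fixed-pattern noise and sky gradients that do not average away, so treat the square-root figure as an upper bound rather than a promise.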

Permanent link to this entry

|

2019

April

28

|

Remember to set a custom white balance...

[Revised.]

Because it had been modified to respond to more of the red end of the spectrum, my new

Nikon D5500 produced pictures that were all distinctly pinkish.

I figured this came with the modification, and for astrophotography, it isn't a problem

because I always adjust the three color channels individually.

But it did make the D5500 less useful than

my Canon 60Da, which has not only a modified filter but also

modified firmware (by Canon) and takes excellent daytime pictures.

Well... I decided to set a custom color balance in the camera (using the "Measure" option and

having it measure the color of a piece of white paper outdoors in sunlight). Presto, change-o... here's

how much difference it made:

Of course, the color balance still won't perfectly match a conventional

camera, because the modified sensor responds to deep red light that a conventional camera filters out. But it should be good for nature photography,

where the deep red end of the spectrum plays a large role.

Old-timers will remember the difference between Ektachrome (which responds to deep red)

and Vericolor and Sensia, which don't.

Permanent link to this entry

|

2019

April

27

|

Thesis-defense best practices

Adapted from a Facebook post.

It's that time of year — people who are finishing a master's degree or doctorate are

undergoing oral examinations and thesis defenses, facing questions from their faculty

committees.

Everybody wants to have their defense at the last possible date so they have more days to

prepare for it. This creates a high-stress situation for students and faculty, since if even

one member of the faculty committee can't come, the defense can't be held and the student

can't graduate.

Some years ago I presided over a thesis defense while sick as a dog, having actually been at

the emergency room the previous night with a stomach ailment.

If I hadn't been there, the student would not have been able to graduate on time and would

have had to pay fees for another semester.

To manage the risk better, I started insisting on both a scheduled defense date and an alternate

date, with everyone confirmed to be available for both.

That way, one mishap won't cost someone a whole semester. We'd simply move to the alternate date.

This implies, of course, that the student can't quite wait until the last minute. But that is a good thing.

A thesis degree is supposed to be proof of ability to manage a long-term project.

I actually think we should flunk you if you can't do that.

To deal with another difficulty, we amended our student handbook to point out that faculty members

are not obligated to be available outside of the regular academic terms or when away for

academic reasons.

Permanent link to this entry

|

2019

April

23

(Extra)

|

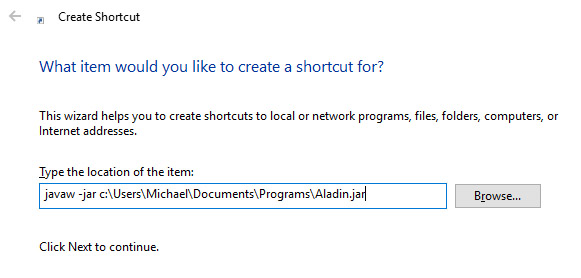

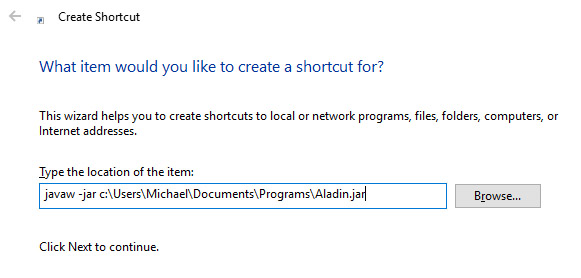

How to run .jar files by right-clicking

(in Windows using OpenJDK)

Sometimes you receive a Java program as a .jar file. You can run it with a command

of the form

java -jar myfile.jar

(using javaw if it's a GUI application).
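If you would rather launch a .jar file from a script, the same command can be sketched in Python. This is only an illustration under the assumption that the Java bin folder is on your PATH; the function names are hypothetical, not part of any Java tool.

```python
import subprocess

def jar_command(jar_path, gui=False):
    """Build the argument list for running a .jar file.

    Uses "javaw" (no console window) for GUI programs and "java"
    otherwise. Assumes the Java bin folder is on PATH.
    """
    launcher = "javaw" if gui else "java"
    return [launcher, "-jar", jar_path]

def run_jar(jar_path, gui=False):
    """Start the .jar file without waiting for it to finish."""
    return subprocess.Popen(jar_command(jar_path, gui))

print(jar_command("myfile.jar"))            # console program
print(jar_command("myfile.jar", gui=True))  # GUI program
```

Passing the arguments as a list, rather than one long string, means a path containing spaces needs no extra quoting.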

What if you want to open it by just double-clicking? With some Java distributions you can reportedly just

tell Windows to associate it with javaw.exe (in the Java bin folder).

With OpenJDK 12 this doesn't work, so here's my workaround.

I created a file named javajar.bat containing the two lines:

start javaw -jar %1

@sleep 10

(I decided I'd like to see the command in a console window,

to confirm that I've launched it, since the actual launch of the .jar file can

take a few seconds; but then the console window closes after 10 seconds.

Your preference may be different. If you don't want to see a console window,

just leave out the second line.)

If you prefer, you can download that file

by clicking here and then unzipping it.

Then I placed javajar.bat in the OpenJDK bin folder and

told Windows to associate .jar files with it ("Open with...").

It has no icon, but it works.

It's annoying how OpenJDK does not seem to be quite ready to use right out of the box.

It works fine, but little bits of setup haven't been done.

I think Oracle is trying to make sure it is a second-class citizen.

Permanent link to this entry

How to pin .jar files to Start

In Windows, you cannot pin a .jar file to Start,

even if it is associated with a launcher as I described above.

What you can do is create a shortcut and pin the shortcut to Start.

The shortcut must contain the full path to the .jar file, preceded by an

appropriate launch command, like this:

Then you can pin the shortcut to Start.
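For reference, here is a small Python sketch that builds the kind of text that goes in the shortcut's Target field. It assumes javaw is on your PATH, and the file location shown is hypothetical; substitute the full path to your own .jar file.

```python
def shortcut_target(jar_path, launcher="javaw"):
    """Return text for a shortcut's Target field that launches a .jar file.

    The path is wrapped in double quotes so that folder names containing
    spaces survive. Assumes the launcher (javaw by default) is on PATH.
    """
    if '"' in jar_path:
        raise ValueError("Windows paths cannot contain double quotes")
    return f'{launcher} -jar "{jar_path}"'

# Hypothetical location, for illustration only.
print(shortcut_target(r"C:\Users\me\Programs\myfile.jar"))
```

The quotes matter mainly when a folder name contains a space, as in C:\Program Files.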

Permanent link to this entry

|

2019

April

23

|

You need a sensor loupe

Pardon the crass commercialism, but a very important (and affordable) camera accessory

for DSLR owners has appeared on the market.

Mine arrived today and immediately paid off.

It's an illuminated magnifier for checking for dust on the sensor of a DSLR.

There are several brands, but I bought the

Micnova one,

which cost about $20. Besides inspecting sensors for dust, you can also use it to examine stamps, coins,

printed matter, small mechanisms, and so forth. Be sure to remove the protective plastic wrap from both sides

of the lens, or you'll think the optical quality is mediocre (though still good enough that you might not realize

anything is wrong!).

When you find dust on the sensor, you can remove it as described here.

DO NOT wipe the sensor with anything other than a special swab; DO NOT use canned compressed gas.

DO use a "rocket" (rubber bulb) to deliver puffs of air

with the camera body facing down; that's usually enough, especially

if you have a loupe and can check your results!

DO make sure the shutter will stay open while you're doing the cleaning;

lock it if possible.

Permanent link to this entry

Blog for camera geeks

I've spent a couple of evenings browsing through a rare find — a photography site that actually

tells me things I wanted to know and had no way to find out.

It's the blog of Roger Cicala

(and occasionally other people)

at Lensrentals.com.

He's even more of a camera geek than I am, and I mean that as a compliment.

That's where I learned that Sigma makes the best lenses (as demonstrated by their

multi-unit tests); saw many repair techniques in action; and saw gruesome pictures

of lenses damaged by various things, including sunlight at an eclipse. Enjoy!

Equal time: BorrowLenses.com also has

a blog, but it's a bit more like

a photography magazine for normal people; still full of good information.

Permanent link to this entry

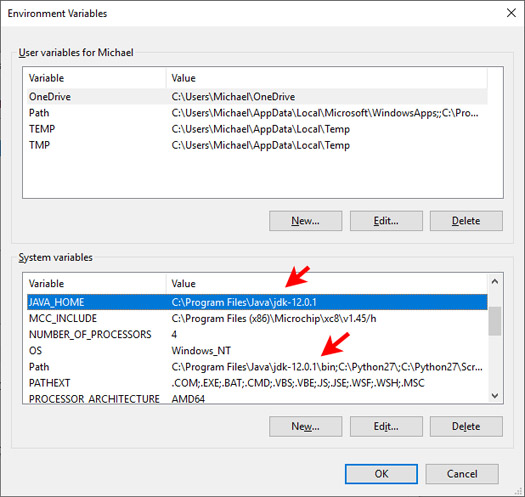

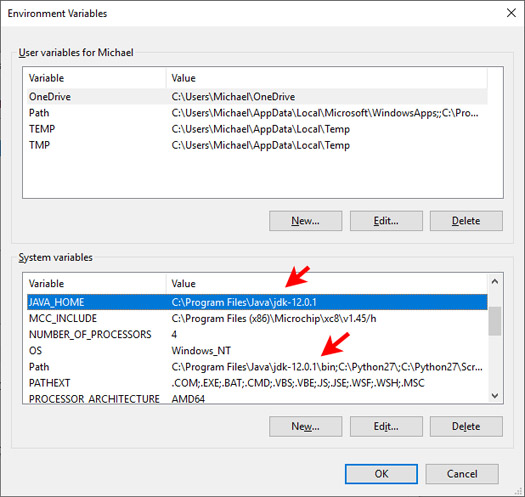

How to install OpenJDK under Windows

(and why you might need to)

Note: None of this is necessary now.

Instead, use the

free version of Java that Oracle provides (not OpenJDK).

If you need to uninstall OpenJDK, undo the steps described below.

Many of us have been greeted this week by warnings that, starting with the latest release,

the traditional Java development and runtime systems from Oracle are no longer free for commercial use.

As I understand it, they're still free for personal use and for software development, but

not for production. Details here.

This matters because, if you run Java programs, you have to have a Java runtime system on your

computer. The Java programs I use most are FireCapture (for astronomical imaging) and Weka (for machine learning).

I think this new change of license terms is going to be hard or impossible to enforce.

Many people will never even hear about it.

The software itself doesn't check whether you're using it for business purposes (how could it?).

I predict almost everyone will just keep getting Java and using it the old way.

Nonetheless, rather than technically violate license terms, I gleefully switched over to Oracle's

free open-source version, OpenJDK. Frankly, I think it may be the more reliable of the two.

And it's the only Java I've had under Linux for some time.

Annoyingly, there is no installer for OpenJDK under Windows. Here's what you have to do:

(0) Uninstall older Java versions from Settings (Apps) or Control Panel (Programs and Features).

To be really thorough, also delete the Oracle Java folders from C:\Program Files and C:\ProgramData.

(1) Click here to download OpenJDK as a zip file and unpack it.

The current version unzips into a folder called jdk-12.0.1, which is the name I'll assume

in these instructions. If you find a different name, make appropriate changes in what follows.

(2) Create a folder called C:\Program Files\Java and move jdk-12.0.1 into it.

(3) Open the control panel for editing system environment variables.

- In Windows 10, open Settings, type "environment" in the search box, and follow the menus.

- In Windows 8, use Control Panel, System, Advanced Settings.

Change the system (not user) environment variables as follows (see the pictures below):

-

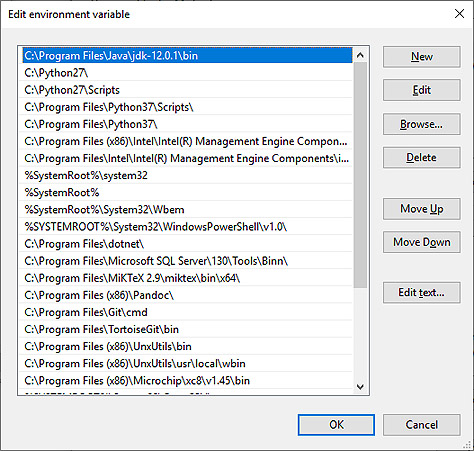

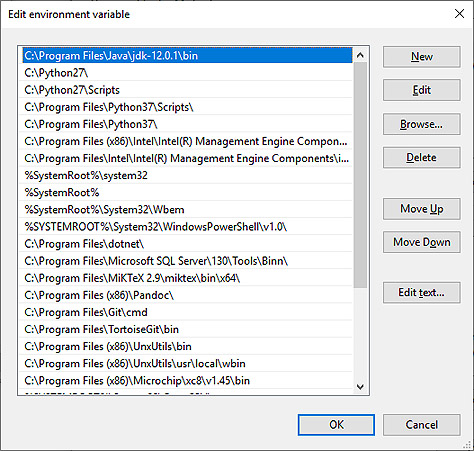

To PATH, add C:\Program Files\Java\jdk-12.0.1\bin and remove any references to Oracle Java.

Under Windows 10, when you edit PATH, it opens up as a box of separate items you can edit and rearrange.

Under earlier versions, be careful because nothing will prevent you from mistyping and

creating a corrupted path.

-

Add the new system variable JAVA_HOME with value

C:\Program Files\Java\jdk-12.0.1 (without bin).

(4) Go to a command prompt and type: java -version

You should get a message saying that OpenJDK 12.0.1 is installed.

If you get a new version of OpenJDK, you have to re-do all this, of course.
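If java -version does not respond as expected, a quick check of the two environment variables can narrow things down. The following Python sketch is only an illustration: the function name is my own, and it assumes the folder layout used in the steps above.

```python
import ntpath
import os

def check_java_env(env, expected_home):
    """Return a list of problems found in PATH and JAVA_HOME (empty if none).

    env is a mapping such as os.environ; expected_home is the folder
    OpenJDK was moved into. ntpath is used so that Windows paths are
    compared case-insensitively and with either kind of slash.
    """
    def norm(path):
        return ntpath.normcase(ntpath.normpath(path.strip().strip('"')))

    problems = []
    java_home = env.get("JAVA_HOME", "")
    if not java_home:
        problems.append("JAVA_HOME is not set")
    elif norm(java_home) != norm(expected_home):
        problems.append("JAVA_HOME points somewhere else: " + java_home)

    wanted_bin = ntpath.join(expected_home, "bin")
    entries = [norm(p) for p in env.get("PATH", "").split(";") if p.strip()]
    if norm(wanted_bin) not in entries:
        problems.append("PATH does not include " + wanted_bin)
    return problems

# Check the current environment against the folder assumed in these steps.
print(check_java_env(os.environ, r"C:\Program Files\Java\jdk-12.0.1"))
```

An empty list means both settings look right. Remember that a command prompt opened before you changed the variables will still see the old values.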

Now for the pictures...

Editing system environment variables:

Editing the PATH (Windows 10):

Note: The Aladin astronomy software package does not work if OpenJDK 12.0.1 is the only

version of Java installed. I think the problem is not with OpenJDK but merely that Aladin is

misinterpreting the version number and mistakenly concluding it is unsuitable. I've submitted

a bug report.

Answer: The problem has to do with the .exe packaging of Aladin.

Instead, download the file Aladin.jar and click here to see how to run it.

Permanent link to this entry

|

2019

April

22

|

First light with a new camera

On short notice, the other day I got a really good deal on a

(slightly) secondhand hydrogen-alpha-modified Nikon D5500

from BorrowLenses.com, which offers them for rent but

apparently had too many on hand. The modification was done by

LifePixel and lets in more of the spectrum than the

Canon 60Da modification; it also alters the color balance so that,

unlike the 60Da, the modified D5500 (which I shall call D5500Hα)

cannot be used for daytime color photography.

But the price was right, and I snapped it up.

I'll buy the best, no matter how little it costs.

The camera arrived in better condition than I expected. I thought I was getting an

ex-rental, but this one showed a shutter count of only 107, which leads me to think it was

only tested in-house and never rented out. The description said there was dust trapped under

the filter, but I was only able to find a small trace of what might be dust.

(I think they were simply quoting LifePixel's usual disclaimer that a replaced filter

cannot be guaranteed to be dust-free.) It is serving me well.

[Reprocessed.]

Here is the first astronomical picture I took with it. (Well, except for one single 2-minute

exposure that preceded it, during twilight, to verify guiding and focus.) These are the

same galaxies in Leo that I photographed with the Canon 60Da the other day.

This was taken with a much smaller lens, so the pictures shouldn't be compared directly.

This is a stack of twelve 2-minute exposures at ISO 200 with a Nikkor 180/2.8 ED AI lens

(of venerable age, made around 1990 judging by the serial number) at f/4 on my AVX mount.

Permanent link to this entry

Markarian's Chain again

[Reprocessed.]

Here's another: the same group of galaxies (Markarian's Chain and M87) that I

photographed a few days ago with the same lens and a different

camera body. Conditions were better this time, but I didn't crank up the

contrast as much when processing the picture, so I don't know which one is actually better.

Permanent link to this entry

Am I going over to the dark side?

Those of you who know how long I've used and recommended Canon DSLRs may be a bit taken aback

at my acquisition of a second Nikon. The new D5500 has the same Sony sensor as my

D5300, but it has been modified to transmit more of the spectrum, and it is actually slightly

more compact and lighter in weight.

You know that the Nikon D5300 that I bought 3 years ago

has served me well. You may also know that the first time I tested

a Nikon DSLR for astrophotography

(back in 2005) it performed poorly and

strengthened my devotion to Canon.

What has changed?

It's a matter of sensor technology. I divide DSLRs into three generations (so far):

- First generation: The infancy of DSLRs; less than 10 megapixels; important features such as live focusing

are not there yet. Canon 300D, Nikon D50.

- Second generation: Mature set of camera features; 10 to 20 megapixels; sensors whose maximum dynamic

range is achieved around ISO 400 to 800 and does not improve further at lower settings. Canon 40D-60D, many

mature DSLRs from other makers.

- Third generation: Sensor noise is "ISOless" and dynamic range increases as the ISO setting is turned

down to 200 or even lower; that is, lower ISO always gives you more dynamic range, with little or no plateau.

You can see what I mean by looking at the dynamic range curves at

PhotonsToPhotos.

Click on the link and choose Canon 300D, Canon 60D, Canon 80D, and Nikon D5300

(the latter two are both third-generation).

The third generation is Sony's doing and first caught astrophotographers' attention with the Sony α7S.

But Nikon is making very inexpensive, yet full-featured, DSLRs with excellent Sony sensors in them.

Canon makes its own sensors and has almost caught up.

As you can see from the charts if you clicked on that link, Nikon and Canon are both in the

third generation, but Nikon, slightly more so.

There may be skulduggery involved. In fact, I'm sure there is. The Nikon cameras preprocess the

images in some way that further reduces the noise level. The details of the preprocessing have not

been revealed, but since it benefits astrophotography, we have nothing to lose by it.

The latest Canon generation is almost there — the 200D and 80D in particular — and you

have to remember that their "raw" images are "rawer" than Nikon's, so results should be compared after

full calibration. I can't be totally sure which sensors are better, but the tests I've seen do seem to

show Nikon having a slight edge, even when confounding factors are factored out. Canon may

overtake Nikon any day now, but on the other hand, Sony/Nikon has had the edge for several years.

Another factor affecting my choice is that in the long run, I need good telephoto lenses, and the

competition is no longer between Nikon and Canon. The top lens maker nowadays seems to be Sigma,

outperforming even Zeiss in tests I've seen. And Sigma makes lenses for both Nikon and Canon.

What's more, Nikon lenses can fit on a Canon with an adapter (though not the other way around),

so if there's another Canon in my future, any Nikon lenses I acquire will remain fully useful.

And in the long run, the future is mirrorless. Mirrorless cameras have thinner bodies and take

adapters for all brands of DSLR lenses.

Presently, though, small, cheap DSLRs are the best buy.

I would advise serious photographers (daytime photographers, not just astro) to buy entry-level

DSLRs such as the Nikon D5500 and Canon 200D.

The price is right (typically $500 plus lens). Spend your money on first-rate lenses.

The only reason you would need a "pro" or "semi-pro" camera body is ruggedness. Even the

low-end bodies have the features you need, and they're cheaper and lighter.

Permanent link to this entry

|

2019

April

21

(Extra)

|

PixInsight for artists?

Here are a couple more of my attempts at art photography. In the spring of 2016 I walked around downtown

Athens, Georgia, and took pictures of "ghost signs," faded signs, some of which had been temporarily

made visible by removal of something else.

Most of my pictures weren't very good and serve only to record the location of things to come back to.

But I did get two good ones, the iconic Tanner Lumber Company and a forgotten hotel.

These were taken with an iPhone 5.

[Update] I thought the sign said COMMERCIAL

HOTEL but the letters do not seem to fit in the space. A correspondent suggests COMMERCE but I am

not able to verify that. The Sanborn Fire Insurance Maps for 1913 and 1918 call it the Athenaeum Hotel

(165-175 E. Clayton St.), and earlier maps indicate it was not a hotel in 1908 or earlier.

[Another update] City directories from the 1920s through the 1940s say GRAHAM HOTEL.

If that's what the sign says, there must be a curlicue to the left of the G.

Another possibility is CLAYTON HOTEL, which was across the street and may have eventually occupied

this building. But I still think I see COM at the beginning of the first word.

Indeed, of the possibilities raised so far, COMMERCE is the only one that seems to fit completely,

and I am puzzled that I can't find any historical record of it.

[Update:] COMMERCE HOTEL is confirmed by someone who saw the same name painted on a door inside the

building. Why it didn't make it into city directories is unclear.

Where PixInsight comes in is that I used its HDR Multiscale Transform to darken the highlights and brighten

the shadows to give a more painting-like look. I also did routine processing of several kinds

in Photoshop.

Permanent link to this entry

|

2019

April

21

|

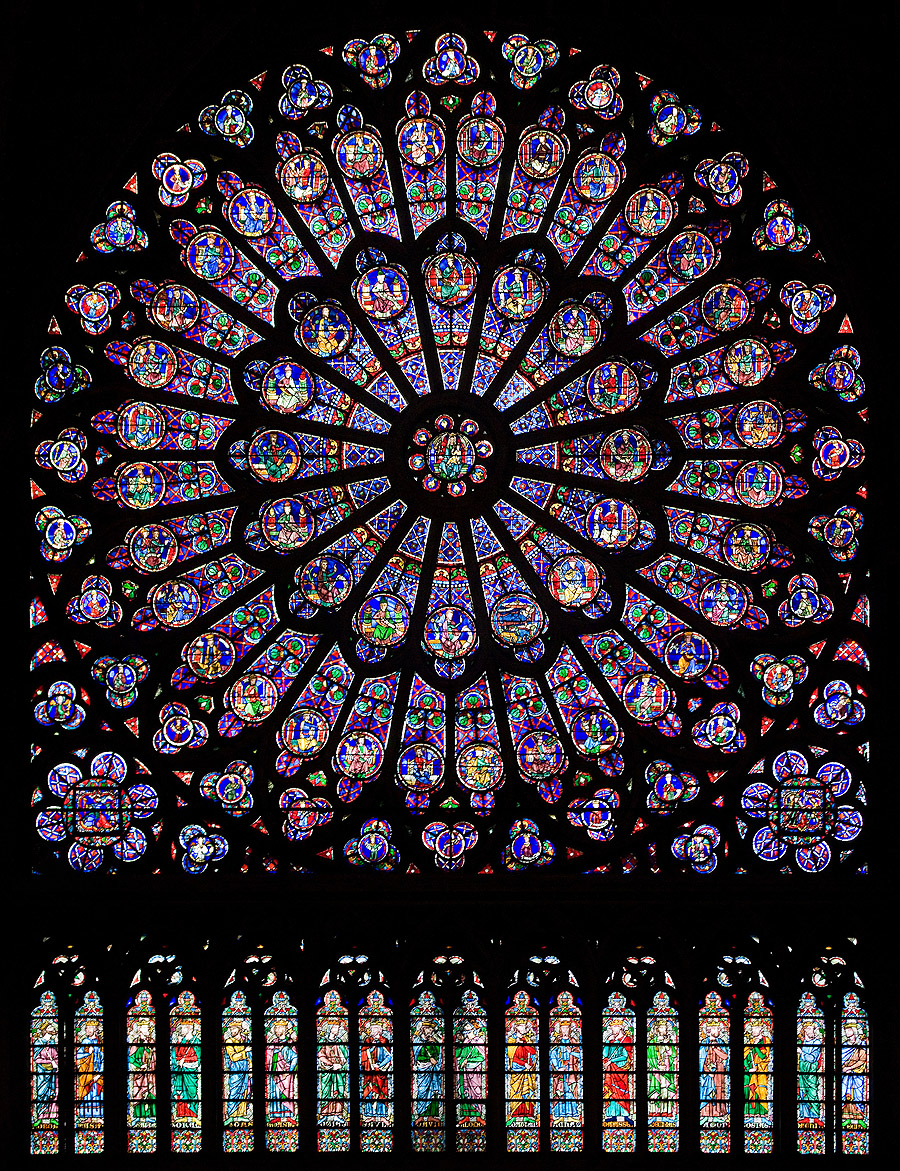

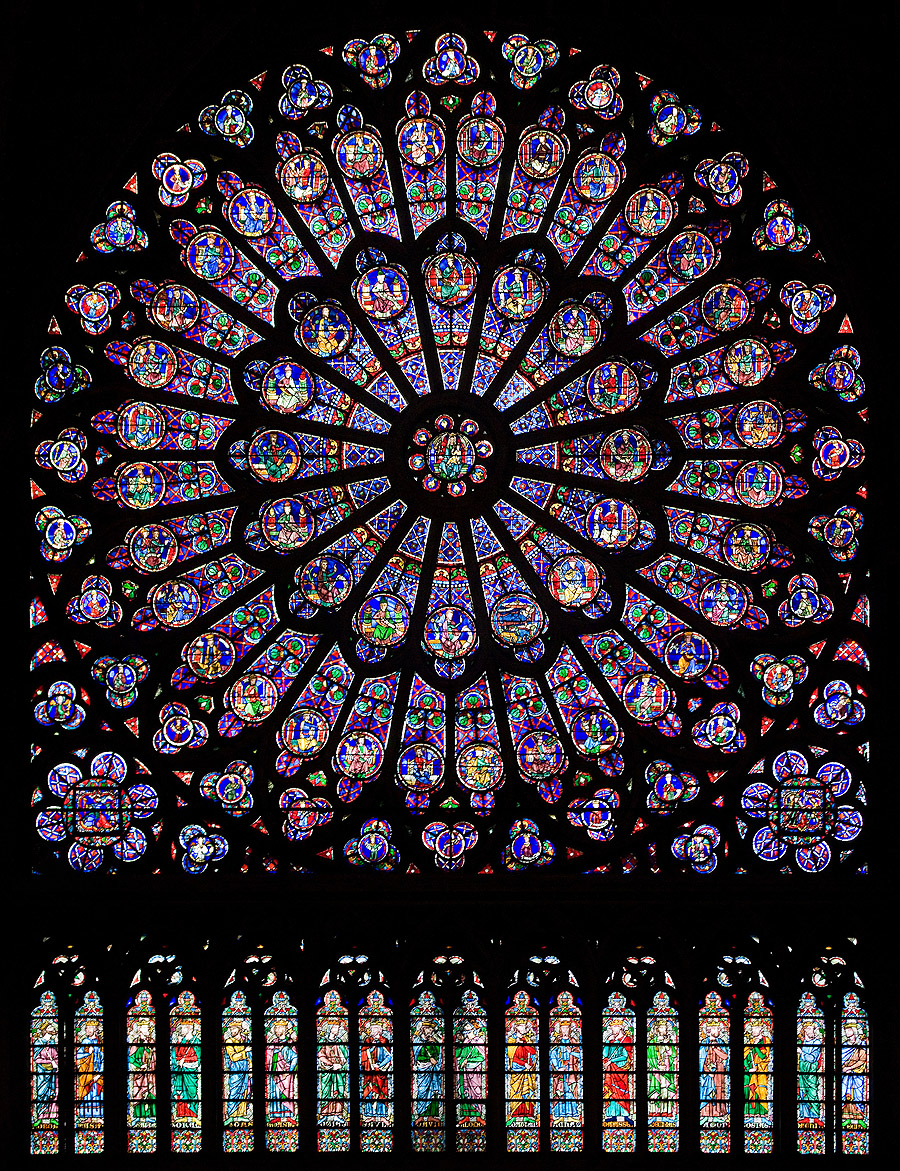

Feast Day of the Resurrection of Our Lord

North Rose Window of Notre-Dame de Paris

Photo by Julie Anne Workman. License: CC-BY-SA 3.0. Click on image.

Permanent link to this entry

|

2019

April

20

|

When the rubberized grip on a camera or binoculars deteriorates

Many plastic objects made about 15 or 20 years ago are deteriorating.

This particularly afflicts the grips on cameras, binoculars, and other

objects (umbrellas and

this fellow's light saber).

Getting two of my older cameras out recently, I came across two cases of this, of

different kinds. Here's how I dealt with them.

Grip remains hard but becomes grayish and looks powdery:

In this case the

plastic has dried up (lost its plasticizers). Like asphalt, many plastics are

a mixture of a hard substance and a lighter, oilier substance that keeps it supple.

The cure? STP

"Son Of A Gun" Protectant applied very lightly and rubbed off.

I did this a couple of times. If you apply too much, you'll leave the surface slippery,

but you can clean it off. This product is less oily than Armor All.

A German product called Sonax

Gummi-Pfleger is also highly recommended.

What you do is clean the surface, swab on the Gummi-Pfleger with a Q-tip, leave

it there a few minutes, and then wipe off all that has not soaked in. I have not

tried this.

Grip becomes sticky, perhaps very sticky:

This is a different problem and is what afflicted my Canon 300D.

Applying a bit of "Son Of A Gun" made it worse. So did all other

attempts to clean the surface. I was vexed.

But then, with the help of the video about a light saber that I linked to earlier, I

figured it out. What you have is a thin black rubber coating on top of black

plastic. That coating has broken down (I'm told it has un-vulcanized itself)

and needs to be taken off. Then you'll have

clean plastic, shinier than before, good as new except that it has no rubber coating.

How do you do that? You need a microfiber cloth that you can sacrifice; I suggest a

cheap eyeglass cleaning cloth. (By now all of us have a few of those; they come free with

sunglasses, etc.) Wet the cloth generously with isopropyl alcohol and scrub.

The sticky coating will come right off, onto the cloth. The process looks messy but

very quickly gives a clean result. Some people scrub with a brush or a plastic spudger;

I did a bit of scraping with a fingernail. The cloth will have lots of black rubber in

it which you can't wash out, so throw it away.

Now I have a Canon 300D that is shiny in places where it used to be matte, but that's a lot

better than having it as sticky as Scotch tape!

[Update] I know from experience with an umbrella handle that if you just leave a sticky

item alone in a warm, dry place for several months, it can re-harden. Maybe something

evaporates out of it.

Permanent link to this entry

Tree

While putting the 40D through its paces, I stepped outside and made a bit of art.

Canon 40D (a camera I got in 2007 and will be using more, as I readjust my equipment

lineup), 40-mm f/2.8 "pancake" lens.

Permanent link to this entry

|

2019

April

18

|

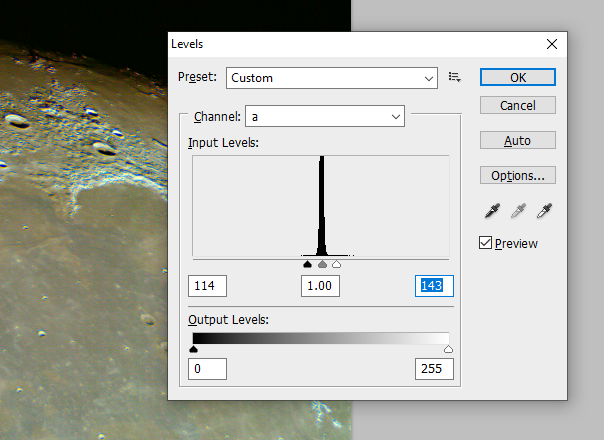

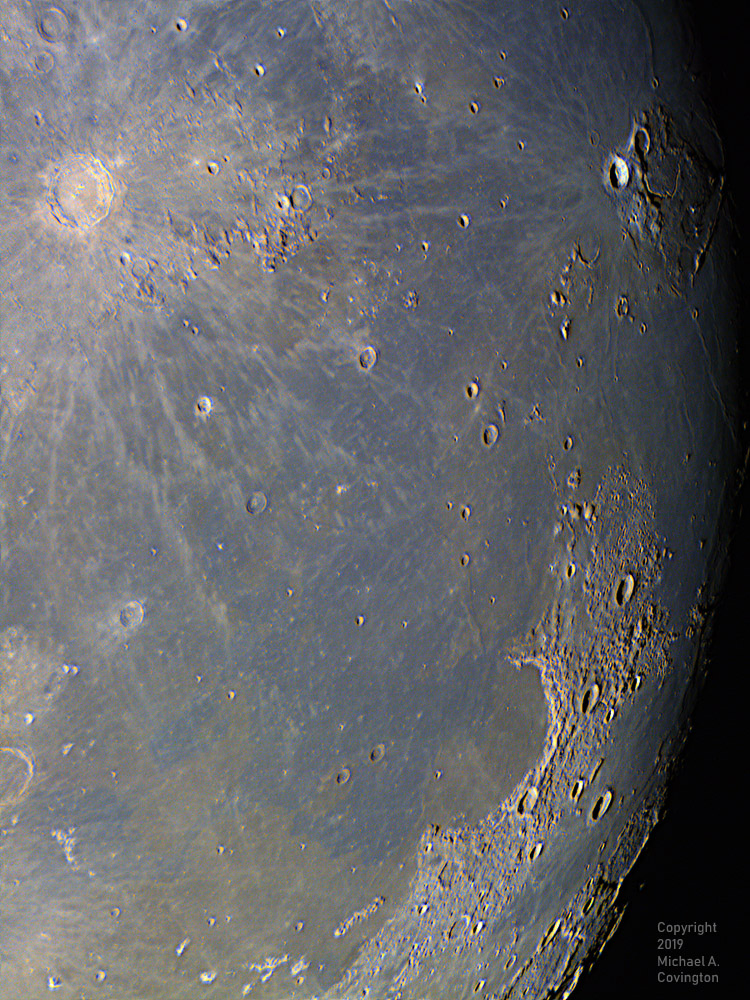

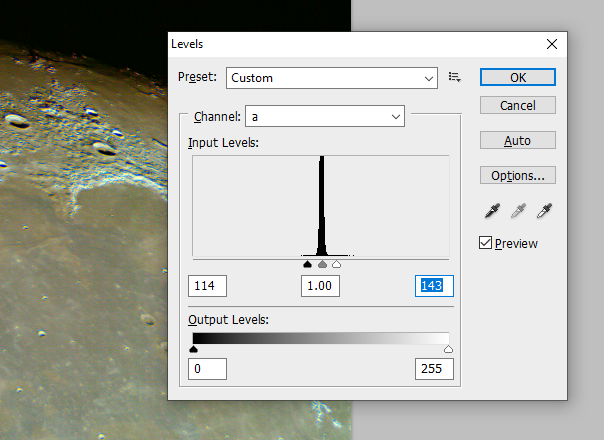

Lunar colors, done better

This is the same color image of a wide area of the moon (see yesterday's entry below).

The difference is that the colors were strengthened in a different way.

I thank Richard Berry for the technique.

It is done in Photoshop as follows:

- Convert the image from RGB color to Lab (L*a*b*) color. (Image, Mode.)

- Press Ctrl-L to open the Levels dialog. Now your channels are not R, G, and B, but rather L, a, and b, where

L is lightness and the latter two are dimensions of color.

- In the a channel, bring in the side marks until they are close to the histogram

and centered on it, like this:

- Do the same to the b channel.

- Convert back to RGB and save the image file.

The advantage of Lab color is that you can adjust brightness and the two

dimensions of color completely independently of each other.
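The arithmetic behind bringing in the side marks can be sketched in a few lines of Python. This is my own illustration, not Photoshop's code; it assumes 8-bit Lab storage in which 128 means "no color" on both the a and b axes, and the default marks of 96 and 160 are arbitrary example values.

```python
def levels(value, black, white):
    """Apply a Levels-style linear stretch to one 8-bit channel value.

    Values at or below `black` map to 0, values at or above `white` map
    to 255, and everything in between is spread out linearly.
    """
    if not 0 <= black < white <= 255:
        raise ValueError("need 0 <= black < white <= 255")
    scaled = (value - black) / (white - black) * 255
    return max(0, min(255, round(scaled)))

def boost_color(pixel, black=96, white=160):
    """Stretch the a and b channels of an (L, a, b) pixel; leave L alone.

    The default marks are symmetric about 128, so neutral gray stays
    neutral while faint tints are pushed farther from the center.
    """
    L, a, b = pixel
    return (L, levels(a, black, white), levels(b, black, white))

print(boost_color((200, 128, 128)))  # neutral gray
print(boost_color((200, 132, 120)))  # faint tint
```

Because L is passed through untouched, the brightness of the picture does not change; only the color differences are magnified, by roughly a factor of four with these example marks.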

Permanent link to this entry

|

2019

April

17

(Extra)

|

Should people buy unusual, expensive things?

Moral and spiritual aspects of an astronomy equipment purchase

This is mainly for my fellow Christians, but others will find it of interest

if they have similar moral principles.

It is written from the perspective of the Christian duty to use material possessions

responsibly for the glory of God, rather than for pure self-indulgence.

An amateur astronomer who is a Christian just asked me for spiritual advice, and

I'd like to run his question and my answer by all of you, while respecting his privacy.

His question was whether it was irresponsible for him to spend $6000 on a specialized piece of equipment.

Important note: He indicated, and from here on I will assume,

that he could afford it without neglecting other duties, that he would get lots of use out of it,

and that he wasn't buying it to impress others, but rather because he

really had a use for it and a good technical understanding of how it would help him.

My reply was: Do not judge your decision by whether other people spend money the same way.

Most people don't buy astronomy equipment at all.

That's one reason it seems to be a strange thing to be spending money on.

If you spent $6000 more than you needed to on a car or a house, nobody would bat an eye

and you would probably never even question it yourself.

Also, if it is legitimate to spend time on something, it is also

legitimate to spend money on it, within reason.

Appreciating God's universe is at least as legitimate as watching baseball games

or reading novels or going to the beach. The difference is that relatively few

people are given the talents and inclination to appreciate the universe the way you do.

Also, this is $6000 for an instrument that will last ten years or more and be used very

frequently during that time.

So my advice is that the purchase is legitimate. But a second part of the question had

to do with the fact that similar equipment that would do the job about half as well, or less,

can be had for a lot less than $6000. He could limp along, so to speak, for $1000.

So is it wasteful to buy the best? Knowing a good bit about this equipment,

I don't think so. It's very comparable to the difference between a $1000 car and a $6000 car.

The second one is a lot more serviceable and will last you longer.

Finally, he asked if he should have used the $6000 to help people (give to the needy)

instead. Well, that's between you and God, and if that's what He's telling you to do, I won't stand in the way!

But when you buy the $6000 instrument you are not throwing the money away — you are giving

people employment, and giving them the satisfaction of making a beautiful and precise instrument,

and they in turn are giving other people employment when they spend their money.

We Christians do not believe we are obligated to give all our money to the poor — that

would just make us poor too. The biggest way we help the poor is by supporting an economy that

gives people jobs. From that perspective, spending money on personal interests (that are not

actually harmful or destructive) is a good, productive, socially beneficial thing to do.

We also don't want to go down the path of saying that precision instruments shouldn't exist —

which is what you're saying if you claim no one should buy them.

And we certainly don't want to be small-minded people who say "this is frivolous" merely because

they are not familiar with it and don't know what it's good for.

So I said, given the "important note" above, that as far as I could tell,

he was doing the right thing and should be at peace about it.

In subsequent conversations on two forums, many wise people agreed with me, and no one

raised a serious objection.

For the astronomers in the audience, I should add that the equipment was not a telescope but

an equatorial mount, motorized and computerized to track the stars. That is actually the part of

our apparatus that is undergoing the fastest technical progress these days, and also the part where

spending more money buys the biggest increase in performance. Some of us make a hobby of finding low-cost

ways to do things, and others, including my correspondent, want to have something reliable and use

it regularly without further ado.


|

2019

April

17

|

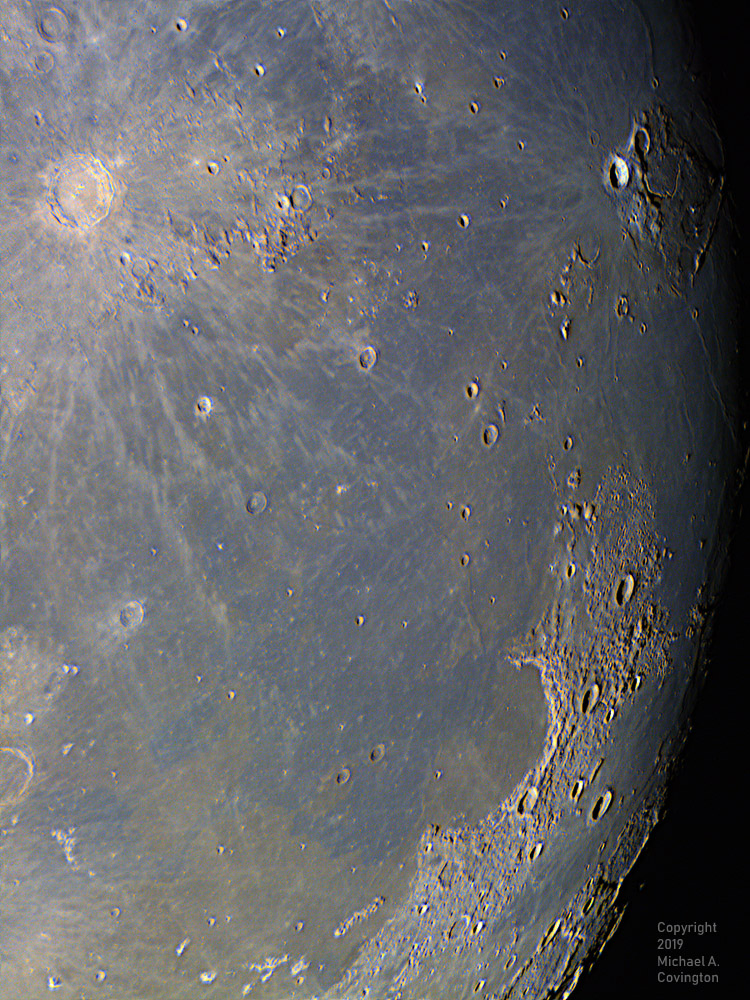

Lunar colors

I didn't have time to do much astronomy last night but didn't want to come away

completely empty-handed from one of our rare clear nights.

So I put my Celestron 5 (vintage 1980) on its pier and used my ASI120MC-S camera

to capture about 3400 video frames of the western part of the

moon, then stacked and sharpened them.

The reason the colors are so vivid is that I strengthened them with PixInsight.

They are real — they're just stronger in the picture than in real life.

You can see that different parts of the surface of the moon are made of slightly

different minerals.


|

2019

April

16

|

Pronunciation of connoisseur

While digging around in the French language yesterday I learned that the

word we spell connoisseur is spelled and pronounced connaisseur in French

and has been pronounced that way for a long time, even before the spelling changed

from oi to ai.

So "con-nes-SUHR" is about right if you want to pronounce it in English, and anything

with an "oi" or "wa" sound is a hypercorrection.

Digging deeper, I learned that the sound /we/ (spelled oi) changed to /ε/ in French

a few centuries ago, but the oi spelling hung on.

This involved words like connoisseur, connoitre (our word "reconnoiter"),

etoit "was," and many others.

After a while the French changed the spellings of these words to ai.

Under other conditions — I'm not sure what — /we/ changed not

to /ε/ but to /wa/ and continued to be spelled oi.

An interesting pair of words is français / François. They are the same word

except that they went through those sound changes differently.

I don't know

whether that's because the name reached us via a different dialect or because proper names

often escape changes that affect the rest of the language; people expect them to be

more old-fashioned.


Another DSLR on the way

On short notice I found and snapped up a good deal on a Nikon D5500 that has had its filter

modified to transmit hydrogen-alpha, so I can photograph nebulae with it.

I'll test it as soon as it arrives.

I am of two minds about Nikon versus Canon DSLRs at the moment.

Canon has a much more consistent system (same lens mount since the 1990s) and Canons all

have "live view shooting" (vibrationless electronic first curtain shutter).

But Nikon sensors perform better at present. (Canon is catching up.)

And on the third hand, Nikon's raw images are considerably preprocessed, not raw.

Fortunately the preprocessing is of a kind that benefits astrophotography.

So as I continue to straddle the Canon-Nikon fence, another Nikon is joining my fleet,

for now. More news soon.


|

2019

April

15

|

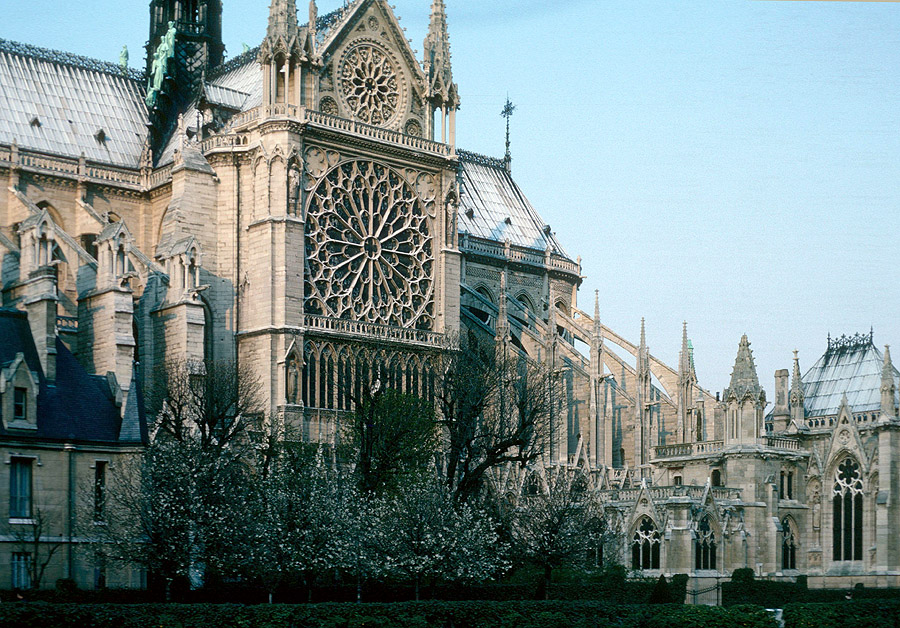

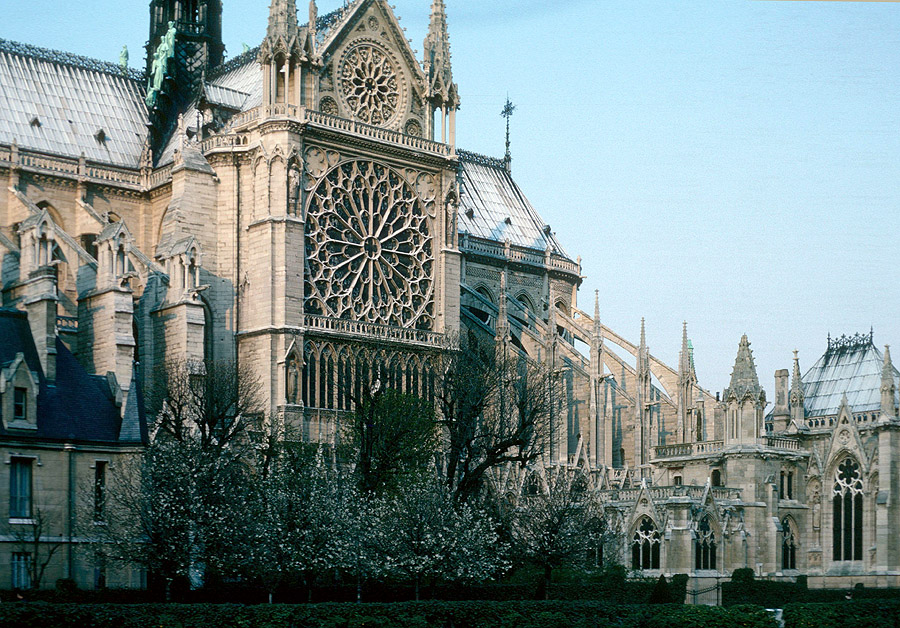

Cathédrale Notre-Dame de Paris

Nous pleurons avec le monde entier l'incendie tragique qui a détruit une grande partie de cette cathédrale aujourd'hui.

(Photo de ma première visite en 1978, Kodachrome 64, Olympus OM-1, 50/1.8 Zuiko,

exposition non enregistrée. Perspective corrigée avec Photoshop.)


|

2019

April

14

|

Why are the edges of this picture reddish?

This is my picture of Markarian's Chain during processing. Notice that the edges,

especially the bottom and the right edge, are lighter than the middle, and somewhat reddish. Why?

First of all, the brightness at the lower left is probably a real gradient in the

sky. There was moonlight present. Also, that part of the field was closer to the

city lights of Athens.

Second, this picture has been flat-fielded. That is, it has been calibrated against

a supposedly matching picture, taken with the same lens and camera on the same evening,

of a flat light box. That compensates for three things: (1) minor differences in sensitivity

between pixels; (2) dust specks on the filter in front of the sensor, if any; and (3) the

tendency of all lenses to illuminate the edges and corners slightly less than the center.

Without boring you with the details, let me say that I confirmed that the flat-field

calibration (including darks and flat darks) was done correctly by comparing three different pieces

of software. Note also that lens vignetting, item (3) in the list I just gave, would be circularly

symmetrical around the center rather than lined up in narrow rows along the edges.

In fact, there was quite perceptible lens vignetting, and the flat-field calibration

removed it.

The problem occurred only with my Canon 60Da, which has a special filter that makes it sensitive

to longer wavelengths of light. It did not occur with my Nikon D5300.

I was almost convinced the sensor in the camera had deteriorated. Such deterioration might easily

occur at the edges, which might be less well attached to the heat sink, or the filter, or something.

But I kept experimenting. Fortunately, I had kept the camera attached to the lens, with no changes

to anything, when I brought it in and put it down on the table. So I was able to perform

more experiments on subsequent days, trying various ways of taking flat fields and re-doing the

calibration.

My light source for the original flats was a small "Visual Plus" light box designed for

viewing slides and negatives. I must have had it for 15 years or more. Repeating the flat-fielding

with the same light source reproduced the problem.

But when I held up the camera and used the daytime sky as a light source — with several

layers of handkerchief in front of the lens to dim the light — the problem went away.

There was other unevenness, but not the original problem.

Then, under more controlled conditions, I used my new

AGPtek light box.

Problem gone!

Here is how much better it got:

You can still see a slight difference between the center and the edges, perhaps caused by a smaller

but similar illumination problem, or possibly a nonlinearity in the sensor itself. But it's an

order of magnitude better than before.

Conclusion? The old light box does not diffuse the longer wavelengths of light very well.

I can't see this with my eyes, but it must be what's going on. It supplies diffuse light at

most visible wavelengths, but at the long-wavelength end of the spectrum, the light must not be

evenly diffused across the panel, and thus, to some extent shadows are cast at the edges of the sensor.

That makes the flat-field image too dark there, resulting in too much brightening when the correction

is performed. And only at the red end of the spectrum.
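To make that arithmetic concrete, here is a minimal Python sketch of flat-field division on a single row of pixels. It is only an illustration, not what any of the calibration programs actually does: the function name `flat_field` and all the pixel values are made up, and the frames are assumed to have had their darks subtracted already.

```python
def flat_field(light, flat):
    """Divide a dark-subtracted light frame by a dark-subtracted flat.

    The flat is normalized to its own mean so that overall brightness
    is preserved. Both arguments are equal-length lists of pixel values.
    """
    mean_flat = sum(flat) / len(flat)
    return [pixel * mean_flat / f for pixel, f in zip(light, flat)]


# Hypothetical row of pixels: an evenly lit sky seen through a lens
# that vignettes, so the edges receive 80% as much light as the center.
light = [80, 90, 100, 90, 80]

# A good flat records the same falloff, and the correction evens out the row.
good_flat = [800, 900, 1000, 900, 800]
corrected = flat_field(light, good_flat)

# A flat that is 10% too dark at the edges (the suspected fault of the old
# light box at long wavelengths) over-corrects and leaves the edges too bright.
bad_flat = [720, 900, 1000, 900, 720]
overcorrected = flat_field(light, bad_flat)

print([round(v, 1) for v in corrected])
print([round(v, 1) for v in overcorrected])
```

With the good flat every pixel comes out the same; with the faulty flat the two edge pixels come out brighter than the middle, which is the kind of border I was seeing.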

The other thing I learned is that there is no such thing as a perfect flat field.

Shutter speeds faster than about 1/100 second show uneven shutter motion;

no light source is perfectly even; and so on.

But most flat fields are better than the ones that were producing the red borders.

[Update:]

It turned out that the battery in the original light box was very weak, and this may

have affected the color and even the diffusion of the light.

But even with a fresh battery, the light box does not emit light equally in all relevant

directions. I'm switching to the new light box to stay.


|

2019

April

11

|

Markarian's Chain and M87

Last night I needed to continue testing how well my newly overhauled AVX mount will

track the stars without guiding corrections, but the crescent moon was in the sky.

I decided to photograph M87, the galaxy whose central black hole was

imaged by the Event Horizon Telescope project

(the image was released yesterday after 2 years of processing).

M87 is the brightest galaxy in the picture, at lower left of center.

A meteor can be seen faintly to the upper left of M87.

The curved row of galaxies across the middle of the picture is known

as Markarian's Chain.

Stack of eight 3-minute exposures, Nikon 180/2.8 ED AI lens at f/4, Canon 60Da.

The very dark background is due to heavy processing that was needed to

overcome moonlight.


|

2019

April

10

|

Mathematics

Arithmetic tells you how to add 123 + 456.

Mathematics tells you whether to add 123 + 456.


Probabilities are not propensities

The philosopher Paul Humphreys points out that probabilities cannot all be

interpreted as propensities (physical tendencies), though some can.

The reason? They work in both directions.

To take his example, if smoking causes cancer, we can determine not only the

probability that a smoker will get cancer, but also the probability that a case of

cancer was caused by smoking. (Bayes' Theorem and some simpler principles connect the two.)

When smoking causes cancer, it's a physical effect, so smoking has a physical propensity

to cause cancer. But cancer does not cause smoking, so the second probability that

I mentioned cannot be a physical propensity.
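As a rough illustration of how Bayes' Theorem connects the two directions, here is a small Python sketch. The numbers are invented for the example and are not real epidemiology, and the function name `inverse_probability` is my own. The probability that a cancer patient is a smoker stands in here for the second, "backward" probability.

```python
def inverse_probability(p_effect_given_cause, p_cause, p_effect_given_no_cause):
    """Bayes' theorem: return P(cause | effect)."""
    # Total probability of the effect, with or without the cause.
    p_effect = (p_effect_given_cause * p_cause
                + p_effect_given_no_cause * (1 - p_cause))
    return p_effect_given_cause * p_cause / p_effect


# Made-up numbers, for illustration only.
p_smoker = 0.20
p_cancer_given_smoker = 0.15
p_cancer_given_nonsmoker = 0.01

p_smoker_given_cancer = inverse_probability(
    p_cancer_given_smoker, p_smoker, p_cancer_given_nonsmoker
)
print(f"P(cancer | smoker) = {p_cancer_given_smoker:.2f}")
print(f"P(smoker | cancer) = {p_smoker_given_cancer:.2f}")
```

Both numbers are perfectly good probabilities, but only the first runs in the direction in which the physical cause operates.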


Trying to wipe Jesus off the map?

Some atheist writers are now trying to refloat the old notion that "Jesus never existed."

Click here for a review.

I think this is as silly as it ever was. Somebody founded Christianity, and why shouldn't his name

be what people have always said it was? You can question his divinity, miracles, etc., all you want

without trying to wipe out the man himself. Nobody tries to wipe out Mohammed, Buddha, or Zoroaster.

The underlying question is how much to trust indirect evidence from ancient times.

Like it or not, we don't have the birth certificates of people who lived in ancient times,

and many of them were not written about (in surviving materials) during their lifetimes

(although Jesus comes closer than most).

If you set the bar such that the evidence for Jesus is considered insufficient, have you thought about

whom else you might also lose? Do you still get to believe in Socrates? Plato? Charlemagne?

Where does it stop?

And underlying that is the question of how much to respect the honesty and intelligence

of people who lived before your own time.

A century ago, it was fashionable to be extremely skeptical about everything ancient.

My sense is that expert opinion has swung around, and we now realize that people in the

ancient world knew more about the ancient world than we do.


|

2019

April

7

|

What's going on with artificial intelligence,

and is it dangerous?

All of a sudden, artificial intelligence is a hot topic in the news headlines.

As someone who has spent a while in this field,

I'm often asked my opinion.

As AI researchers go, I'm a bit of a skeptic. Here are some key points as I see them.

- There is no sharp line between artificial intelligence (AI) and other uses of computers.

AI is not a specific new invention or breakthrough.

- The reason for the boom in AI right now is mainly availability of information and computer power.

Computers have information on a scale that they never had before.

Older techniques are finally productive on a large scale.

- Machines are not conscious, and there is no known technical path to making them so.

- The biggest risk is that people are entrusting too much of their lives

to automatic systems that they do not understand.

What is artificial intelligence?

I sometimes sum it up by saying, "AI is about half a dozen different things, and some

of them are real."

To be a little clearer, AI is the use of computers to do things that normally are thought of

as requiring human intelligence. One useful criterion is that if you know exactly how

something is done, without having to study human performance, then it's computer programming.

If you don't know how it's done, but you can study how humans do it and model the process

somehow, it's AI.

Another criterion is that AI often lives with imperfection. Results are often somewhat

approximate or uncertain; the solution to a problem that the computer finds may not be the

best solution, though it is usually close; and so on. But this doesn't really distinguish

AI from various kinds of mathematical and statistical modeling.

AI originated at a conference at Dartmouth in 1956. At the time, brain science was in a

primitive state — many experts thought the human was a huge, fast, but simple

stimulus-response machine — and at the same time, they were beginning to realize the

power of computers, which, to them, actually seemed more sophisticated than the human brain.

To a striking extent, the future of AI was already foreseen at that conference.

But there was confidence that the intelligence of machines would grow quickly and become

more and more like human intelligence; all we needed was technical progress.

By 1970 it was clear that the dream was not coming true, and AI started to have ups and downs.

Two striking "ups" were around 1986 and around right now. Both, I think, were driven by the

sudden availability of more computer power. Minicomputers and PCs drove the first one; the Internet

and the computerization of everyday life are driving the second one.

AI comprises several different technical areas. The most important ones at present are:

- Automatic recognition of patterns in data (called "machine learning");

- Recognition of objects in pictures (computer vision);

- Processing information given in human languages (natural language processing; includes

language translation, gathering data from English-language input, speech synthesis and

recognition; and even finding grammar errors);

- Robotics (where the robot responds to its environment and does not just execute

preplanned motions).

My specialties are the first and third ones, and I'm doing plenty of useful consulting work

for industry right now. Let no one deny that AI has given us a lot of good things.

It enables computers to do very useful things with data.

Crucially, the greater power we are getting from AI nowadays is due to better computers

and more information stored in them, not primarily to AI breakthroughs, though there has

certainly been progress.

Fifty years ago, nobody could run a pattern recognizer on your shopping habits because

the information wasn't available. Many merchants didn't use computers at all. Those that did,

stored no more information than absolutely necessary because storage was expensive, and anyhow

their computers were all separate. Today, all business is computerized and the Internet ties

all the computers together.

We also have much more powerful computers. In the 1970s, most universities didn't do AI experiments

because they required so much computer time.

A day of serious AI work would tie up a computer that could have done hundreds if not thousands

of statistical analyses for medical or agricultural research.

Nowadays you can outfit an AI lab at Wal-Mart. (Well, Office Depot or Newegg if you want to aim a little

higher.) I do most of my work on PCs that are not especially high-end, although rented Azure

servers do come into it.

Is the singularity coming?

"The singularity" is an event predicted by Ray Kurzweil, a time when computers will

be vastly more intelligent than human beings and will take over most of our intellectual tasks

— essentially, will take over the universe as our evolutionary descendants.

Kurzweil's predictions struck me as wildly unrealistic. He assumed that inexorable exponential

growth will somehow get us past all obstacles, even the laws of physics.

He predicted in 2005 that by 2020, $1,000 would buy a computer comparable in power to the human brain,

with an effective clock speed (perhaps achieved by parallelism) of 10,000,000 GHz and

1000 gigabytes of RAM.

Well, it's almost 2020, and a big computer today is more like 3 GHz and 64 GB. Not there yet.

And anyway, what do you mean, "a computer comparable in power to the human brain"?

That smacks of behaviorist brain science of the 1950s.

The one thing we're sure of today is that we don't know how the human brain works.

Even without Kurzweil's Singularity, though, are the machines going to take over?

Is the time near when computers will do nearly all of mankind's intellectual work and will

effectively run the world?

I don't think so. If you actually look back at

early AI literature (not science fiction), you see that

AI today is not much different from what the first experimenters envisioned

in 1956, and although computers will continue to become dramatically more useful, humans will

continue to use them as tools, not as replacements for themselves.

Where the real danger lies

The real risk, as experience has already demonstrated, is that

people are too eager to trust machines, including

machines they don't understand.

We've been through this before. In the 1950s, computers were often called

"electronic brains" or "thinking machines," and lay journalists asked —

with at least some seriousness — what the machines were thinking about

when they weren't busy.

The term "artificial intelligence" makes some people today imagine that the

machines are conscious, as does the term "neural network" (a kind of pattern recognizer

not particularly related to the brain, despite its name).

We don't know how to make a machine conscious.

However, we know how to fool people into thinking a machine is conscious.

Weizenbaum did that in 1966, with

ELIZA,

a computerized counselor that would see you type words like "mother" and ask

questions like, "Can you tell me more about your mother?"

People were sure it really understood their deepest thoughts.
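To show how little machinery such a trick needs, here is a minimal Python sketch of keyword matching in the spirit of ELIZA. It is not Weizenbaum's program; the rules, the fallback reply, and the function name `reply` are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical keyword rules; the real ELIZA script was far larger.
RULES = [
    (re.compile(r"\b(mother|father|sister|brother)\b", re.IGNORECASE),
     "Can you tell me more about your {0}?"),
    (re.compile(r"\bI feel (\w+)", re.IGNORECASE),
     "Why do you feel {0}?"),
]
DEFAULT_REPLY = "Please go on."


def reply(sentence):
    """Return a canned question built from the first keyword rule that matches."""
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            return template.format(match.group(1).lower())
    return DEFAULT_REPLY


print(reply("My mother never listens to me."))
print(reply("The weather is nice."))
```

Nothing in this code understands anything; it spots a word and fills in a blank, yet the replies can feel attentive to the person typing.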

More recently,

Barbara

Grosz has warned us about the damage that could be done by

similar tricks in computerized toys for children.

A talking doll could teach a child to behave in very unreasonable ways —

or even extract private information and send it to a third party.

And I haven't even gotten to the issue of entrusting important

decisions to machines. I'll return to matters of AI and ethics in another entry soon.


|

2019

April

6

|

Tycho, 2003?—2019

Our family has lost one of its most delightfully eccentric members.

Tycho

the miniature pinscher,

Cathy's dog since 2005, departed this life yesterday, April 5,

in Kentucky.

He was Cathy's faithful companion for

the past fourteen years and was at least a couple of

years old when she adopted him. He had a long life.

He will be missed.


|

2019

April

4

|

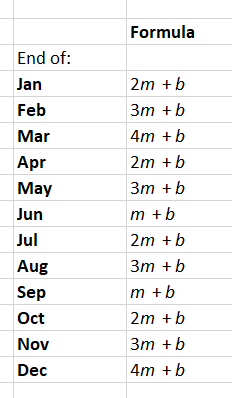

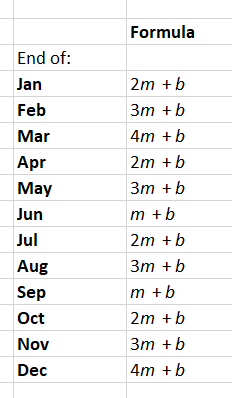

The art of making quarterly tax payments

[Updated.]

One of the awkward parts of American income tax law is that when quarterly

estimated payments are needed (e.g., from small businesses), the IRS strongly

encourages payments of equal amounts at unequal intervals.

In what follows I'm going to give you some math and a spreadsheet to help

you make sure you always have enough money set aside, month by month, to make

the payments.

Quarterly

payments are due on unevenly spaced dates

(January 15, April 15, June 15 [gotcha!], and September 15).

That is, they are 3, 2, 3, and 4 months apart.

The quarterly payments don't have to be equal, but the IRS strongly encourages it.

If you want to pay equal amounts, the Estimated Tax Worksheet tells you how to

figure them, and there is a "safe harbor" provision — roughly speaking, if

this year's payments would have covered last year's taxes, you're not penalized for

underpaying even if your income goes up. You do have to pay the rest of the tax

when you file your yearly return, of course.

If your income fluctuates a lot, the alternative is to do a lot more accounting

(the Annualized Income Installment Method, or at least Form 2210) and figure the

tax for each period. In that case, the laws take into account the fact that the periods

aren't equal in length. But it's awkward.

This is discussed in IRS

Publication 505, and you can tell from reading it that the authors seem to have had

several contradictory systems in mind.

The usual advice is to put the appropriate percentage of your net business

income into a savings account as it comes in — typically 25% for

federal and 5% for state taxes — and you'll always have enough to make the

quarterly payments.

But if you want peace of mind, you can do some calculating to fit the

steady flow of money to the uneven dates of the payments.

Let m be one month's share of your tax payments (1/3 of a fixed

quarterly payment), and let b be whatever additional minimum balance

you need to maintain in the savings account.

Then at the end of each calendar month, your savings account

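should contain this much money:

- January: 2m + b
- February: 3m + b
- March: 4m + b
- April: 2m + b
- May: 3m + b
- June: m + b
- July: 2m + b
- August: 3m + b
- September: m + b
- October: 2m + b
- November: 3m + b
- December: 4m + b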

This assumes that in the months when you have a payment, it will go out

before any further money is set aside.

(Where did I get the formulas? From assuming that you need to have enough money to make

the June payment, which is the hard one; that is, at the end of May, you need 3m+b

in the bank.

Working both directions from there, the account balance goes up m every month

and down 3m when a payment goes out.)
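For those who would rather compute than read a table, here is a short Python sketch of the same rule. It is a sketch of my reasoning, not the spreadsheet itself; the function name `month_end_balances` and the dollar figures are made up. It starts from 3m+b at the end of May and walks forward around the year, taking out 3m in each payment month before adding that month's m.

```python
import calendar

PAYMENT_MONTHS = {1, 4, 6, 9}  # payments due the 15th of Jan., April, June, Sept.


def month_end_balances(m, b):
    """Return {month number: balance needed at the end of that month}.

    m is one month's share of the tax (1/3 of a quarterly payment);
    b is the extra minimum balance to keep in the account.
    """
    month, balance = 5, 3 * m + b      # end of May: enough for the June payment
    balances = {month: balance}
    for _ in range(11):                # walk forward through the other 11 months
        month = month % 12 + 1
        if month in PAYMENT_MONTHS:
            balance -= 3 * m           # the payment goes out first...
        balance += m                   # ...then this month's share is set aside
        balances[month] = balance
    return balances


# Made-up example: $500 a month set aside, $100 minimum balance.
for month, balance in sorted(month_end_balances(500, 100).items()):
    print(f"{calendar.month_name[month]:<10}{balance:>8}")
```

Walking forward all the way around the year gives the same figures as working in both directions from May, because one more step past April lands back on 3m+b.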

You can click here

to download a spreadsheet

that calculates it all for you.

Fill in the three green boxes, and you're set.

Until your business income fluctuates!

(By the way, the numbers in the spreadsheet are not mine; they're a made-up example.

Also, I am not a tax professional and am simply sharing something I created

for my own use. If I am mistaken about anything, I'd like to know it — but be

prepared to substantiate what you're telling me, because lots of people "have heard"

something different from an unofficial source, and I've been studying this for some time.)


|

2019

April

3

|

A galaxy and a dwarf

I didn't expect much from this picture; in particular I didn't expect the blob

that you see below center. But there it is.

On the evening of April 2, the sky was somewhat murky, but I needed to continue

refining the autoguiding settings of the newly overhauled AVX, so I had my AT65EDQ

telescope out and took a couple of pictures of galaxies.

This is one, actually a stack of eleven 3-minute exposures (6.5-cm aperture, f/6.5,

Canon 60Da camera at ISO 800).

The edge-on galaxy NGC 3115 was my target, and as you can see, it's plainly visible,

though a larger telescope and higher magnification would have helped.

I was surprised, though, to see another glowing blob on the pictures.

It was not plotted in Stellarium (the online star atlas that I usually use),

but I found it using Aladin,

which designates it NGC 3115 DW1, a

dwarf galaxy that is a companion of NGC 3115.

It has also been called NGC

3115B and may have at one time been thought to be a globular cluster.

(Actually, it is a galaxy with globular clusters in it.)

Interstellarum Atlas shows it and labels it MCG-1-26-21,

its designation in the Воронцов-Вельяминов (Vorontsov-Velyaminov) Morphological Catalogue of Galaxies.

(Воронцов-Вельяминов is one person with a hyphenated name.)

There are several kinds of dwarf galaxies.

This one is the kind that resembles a large globular cluster.

In fact, there seems to be a continuum from globular clusters (on the outskirts of big galaxies)

to compact, spheroidal dwarf galaxies, although anything one might say about

classifying them has been disputed.

Following this up led me to a very interesting project to discover dwarf galaxies

with amateur telescopes. Amateur astronomy continues to rejoin cutting-edge science,

after having been left behind around 1970. It seems that amateurs' penchant for pretty

pictures of distant galaxies has led to exactly the right technology for finding

faint dwarf galaxies. If I stumbled on this one (not a new discovery, but not a well-known

object either) under poor conditions with a telescope

no bigger around than my fist, what about trying a remote-controlled 20-inch at a very dark site?

And that is exactly what the DGSAT Project

is aiming to do. I wish them success!


Stellarium is much improved

The latest release (0.19.0) of Stellarium, free star

and planet mapping software, has fixed the problems

that I mentioned during the past year, including

field-of-view indicators and

trouble computing the position of Jupiter's Great Red Spot.

I am using it and heartily recommend it.


Familiar galaxies in Leo

I know I'm repeating myself — I've taken much the same picture before —

but here is the famous Leo Triplet or Leo Triplets

(the word triplet can mean either a trio or a member of a trio).

Stack of ten 3-minute exposures from the same autoguider-testing session

as the image of NGC 3115 above.


|

2019

April

2

|

A star cluster and a planetary nebula

[Updated.]

The weather hasn't allowed me to do much astrophotography since

getting the AVX overhauled,

so I'm still in equipment-shakedown mode, trying to become

familiar with its performance, which is modestly improved.

I'm also changing my technique in various ways and will be doing more autoguiding.

My key conclusion from recent tests is that when periodic error is well controlled,

polar alignment becomes the limiting factor for unguided exposures.

So I'm starting to do guidescope-assisted polar alignment (which requires the computer

at the beginning of the session but not throughout it) and will probably end up

doing more autoguiding too.

(Numerical example: For a drift no greater than 3 arc-seconds in 2 minutes of time,

I need to polar-align within 6 arc-minutes of the pole. That is right at the limit

of what can be achieved with Celestron's ASPA [all-star polar alignment]. That is why

I now drift-align with a guidescope and PHD2 software when practical. And if I'm set

up for that, I might as well continue autoguiding, unless for some reason I don't want

to leave the computer connected.)
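The numbers in that example can be checked with a few lines of Python. This is a minimal sketch under a stated assumption: it uses the usual small-angle estimate that the worst-case declination drift equals the polar alignment error (in radians) times the angle the sky turns during the exposure. The function names are hypothetical, and the estimate ignores refraction and where in the sky the target is.

```python
import math

SIDEREAL_RATE = 15.041  # arc-seconds of sky rotation per second of time


def worst_case_drift(polar_error_arcmin, exposure_seconds):
    """Approximate worst-case declination drift, in arc-seconds."""
    error_radians = math.radians(polar_error_arcmin / 60)
    return SIDEREAL_RATE * exposure_seconds * error_radians


def max_polar_error(drift_arcsec, exposure_seconds):
    """Largest polar alignment error, in arc-minutes, that keeps the drift within the limit."""
    error_radians = drift_arcsec / (SIDEREAL_RATE * exposure_seconds)
    return math.degrees(error_radians) * 60


print(f"Drift with a 6' error in 120 s: {worst_case_drift(6, 120):.2f} arc-seconds")
print(f"Error allowed for 3\" in 120 s: {max_polar_error(3, 120):.2f} arc-minutes")
```

Under that assumption, a 6-arc-minute error gives a drift of a bit over 3 arc-seconds in 2 minutes, and holding the drift to 3 arc-seconds calls for an error a little under 6 arc-minutes, in line with the figures above.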

So it was last night, when — hurrying ahead of incoming clouds — I took a

series of autoguided 3-minute exposures of star cluster M46 and planetary nebula NGC 2438.

New measurements by the Gaia orbiting observatory show that the nebula is in front

of the cluster, less than half as far away. For a long time it was thought to be in the

cluster or even behind it.

Six of the exposures were stacked to make the picture you see above.

AT65EDQ refractor, Canon 60Da at ISO 800. You can see stars down to magnitude 16.5

if not fainter. Not bad for a telescope two and a half inches in diameter!


Short notes

Today is the 37th anniversary of my last day at Yale; I stayed an extra day to avoid

finishing my Ph.D. on April Fools' Day.

Major Daily Notebook entries are about to be written. I'm going to tackle artificial

intelligence ethics (a hot topic), and then an even hotter topic, which we'll get to.

Stay tuned!


|

|

|

This is a private web page,

not hosted or sponsored by the University of Georgia.

Copyright 2019 Michael A. Covington.

Caching by search engines is permitted.


Portrait at top of page by Sharon Covington.


|

|